T-SQL Tuesday #31, hosted by Aaron Nelson this month, asked the SQL community to write about logging. Logging is an open door into many topics and could pertain to error logging, logging with monitoring, SQL Server logs, feature logs, grocery store logs of how much you spent and how you inserted it into SQL Server to average out your spending for a month…you get the picture. Originally, I had written about a different topic but recently, I was asked a question that belongs in the T-SQL Tuesday logging theme and I wanted to share it with everyone.

I was asked recently if an update that updates a column to the same value is sent to the transaction log, essentially causing a dirty page. I don’t usually answer a question with, “It Depends” but this one really does require that as an answer. The question was really good too and I’d like to share it as it will show the value in what we will cover. The question was, “In Merge Replication, will an update on a column being replicated, affect the change count of the article, replicate the change, as well as log the update in the transaction log?”

The one good thing about telling someone, It Depends, for an answer is you can always follow it up with the reasons why it really does. Let’s take a look at the reasons the answer to this question, do ghost records cause logging events, depends on the table that is being updated.

It Depends on…

The structure of a table being updated will make the difference.

- If the update changes the Primary Key – keep in mind composite keys

- If a table is a HEAP table

- If LOB columns are involved

The one thing I do want to point out on the specific question that was asked: even knowing what we will go over will show when a row is logged to the transaction log, the scenarios change slightly when Merge Replication is involved. The reason for this is that Merge Replication relies on triggers that will insert rows into tables that allow replication to monitor and track changes. Those inserts, updates and deletes performed for Merge Replication become logging events themselves. We will not show those scenarios with replication involved because, going over the scenarios themselves will show if the replication events would be logged. I know, that sounded odd but let me explain…

Before I go any further, I want to point out an article written by Paul White, “The Impact of Non-Updating Updates". Paul goes over these scenarios in much more detail than this blog will and is a resource that I’ve used the last few years to return the same answers as I will here. Paul is one the most brilliant SQL Server MVPs I know. When reading his articles, you’ll not only gain answers but a wealth of knowledge into why those answers are uncovered. Paul’s article is where I referred the person that sparked this great conversation as well.

Primary Key

The first reason listed is referring to the existence of a primary key on the table making a difference in if an update, updates the same value that already exists, causes a transaction log entry. The reason here may be quite clear. The primary key is the structure of the data rows so it makes sense that any change, in any way, would require logging to ensure that structure truly has changed or not.

For the examples below, Process Monitor will be used to monitor the ldf file for activity and the undocumented function fn_dblog, will be reviewed to show the activity as it occurs in the transaction log.

Below, the example will run through showing updates referring to a primary key or clustered index that affects logging.

CREATE TABLE tblPrimaryKeyUpdate (

ID1 INT

,ID2 INT

,COLVAL VARCHAR(10) PRIMARY KEY (

ID1

,ID2

)

)

Insert one row into the table.

INSERT INTO tblPrimaryKeyUpdate

SELECT 1

,2

,'Case 1'

We do not need any more data than the one row to complete the test.

Run a CHECKPOINT to ensure everything that was just logged to the transaction log is flushed.

CHECKPOINT

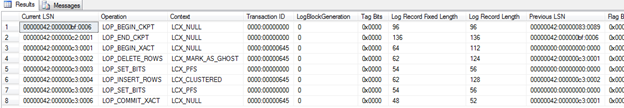

Review the transaction log contents using fn_dblog function.

SELECT * FROM fn_dblog(NULL, NULL)

The above results show the checkpoint and only in the transaction log currently. Now that the transaction log is cleared of any previous changes, the table and row inserted into tblPrimaryKeyUpdate can be updated. In this test, we will update the column, COLVAL to itself.

UPDATE tblPrimaryKeyUpdate

SET COLVAL = COLVAL

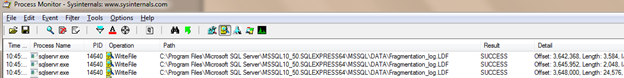

From the results shown in process monitor

We see that there was no activity on the ldf file from the update statement that was executed. Rerun the statement to review the results from fn_dblog. From the results, you’ll see that the transaction log has not logged any new rows. This is due to the update statement not altering either column that is part of the composite key. Next, update one of the ID columns to the same value.

UPDATE tblPrimaryKeyUpdate

SET ID1 = ID1

Rerun fn_dblog again and review the results.

As shown above, the results show the transaction log did in fact have rows inserted into it in order to track the changes to the column ID1. We can see the transaction from the starting LOP_BEGIN_XACT through the LCX_MARK_AS_GHOST, LCX_CLUSTERED and LOP_COMMIT_XACT.

HEAP Table

The second scenario that is taken into account is, is the table a HEAP table? Given the storage of a HEAP table, we could come to the same conclusion as the primary key scenario. Given the changes and the need to retain the fact, the table structure may change; the rows need to be logged.

For example, create a HEAP table as shown below.

CREATE TABLE tblHEAPUpdate (

ID1 INT

,ID2 INT

,COLVAL VARCHAR(10)

)

Use the same insert and update statements from the examples working on tblPrimaryKeyUpdate by changing the table name. Make sure a CHECKPOINT is executed again prior to the update statements.

CREATE TABLE tblHEAPUpdate (

ID1 INT

,ID2 INT

,COLVAL VARCHAR(10)

)

INSERT INTO tblHEAPUpdate

SELECT 1

,2

,'Case 1'

CHECKPOINT

UPDATE tblHEAPUpdate

SET COLVAL = COLVAL

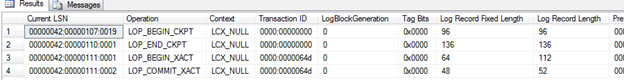

Rerun the select on fn_dblog and review the results.

Reviewing process monitor shows activity on the physical ldf file as well. The results in process monitor also relate back to the update, updating 3 rows all together.

Above, there is a different result based on the table being a HEAP. The update was recorded even knowing it was updating the row and column with the same value.

LOB

The last scenario involves the existence of a LOB column and the update. I am going to let you test that scenario and hope you read Paul’s blog on this very topic and examples. I don’t want this short review of the scenarios to take away from something that went through the scenarios in much more depth. The end result is, if a LOB column update exceeds the 8000 bytes or 4000 Unicode, the event is written to the transaction log.

Summary

Knowing what is being logged, how, and why can be extremely valuable. Reviewing and monitoring these types of situations can help decisions to be made, like the one that prompted this discussion. The information gathered can also be used in transaction log sizing efforts, decisions on when a transaction log backup should be run in a full recovery model, and an overall deeper understanding of how the transaction log is being utilized by SQL Server and the database.